Objective

One of the objectives of the 2016 FRC game was to shoot foam balls through a small opening more than 7 feet up a tower. To make our shots more accurate and repeatable, we use computer vision to automatically align the robot with the goal and shoot.

Figure 1: Two of the three faces of the high tower goal. Strips of retroreflective tape are placed in a ‘u’ shape around each opening.

Jetson TK1

Unfortunately, merely locating the tower goal is a difficult and computationally expensive task. The code uses several filters (like the ones on Snapchat or Instagram) that must visit every single pixel of the image, and at the standard size of 640 by 480, each image has 307,200 pixels! Pair this with the fact that you would ideally want between 10 and 30 frames per second (roughly 33 to 100 milliseconds per image) and performance becomes a serious issue.
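As a rough sanity check on those numbers, the arithmetic can be sketched in a few lines of Python. The function names are only illustrative, and the per-pixel figure assumes a single pass that touches each pixel exactly once, which understates the real cost since several filters run on every frame.

```python
# Back-of-the-envelope frame budget for a 640x480 image.
# Assumption: one pass over the image, touching each pixel once.
WIDTH, HEIGHT = 640, 480


def pixels_per_frame(width, height):
    """Number of pixels a full-image filter has to visit."""
    return width * height


def frame_budget_ms(fps):
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def ns_per_pixel(fps, width=WIDTH, height=HEIGHT):
    """Time available per pixel, in nanoseconds, for a single pass."""
    return frame_budget_ms(fps) * 1e6 / pixels_per_frame(width, height)


if __name__ == "__main__":
    print("pixels per frame:", pixels_per_frame(WIDTH, HEIGHT))
    for fps in (10, 30):
        print(fps, "fps:",
              round(frame_budget_ms(fps), 1), "ms per frame,",
              round(ns_per_pixel(fps), 1), "ns per pixel")
```

At 30 frames per second this leaves only on the order of a hundred nanoseconds per pixel for everything the pipeline does, which is why a single CPU core struggles.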

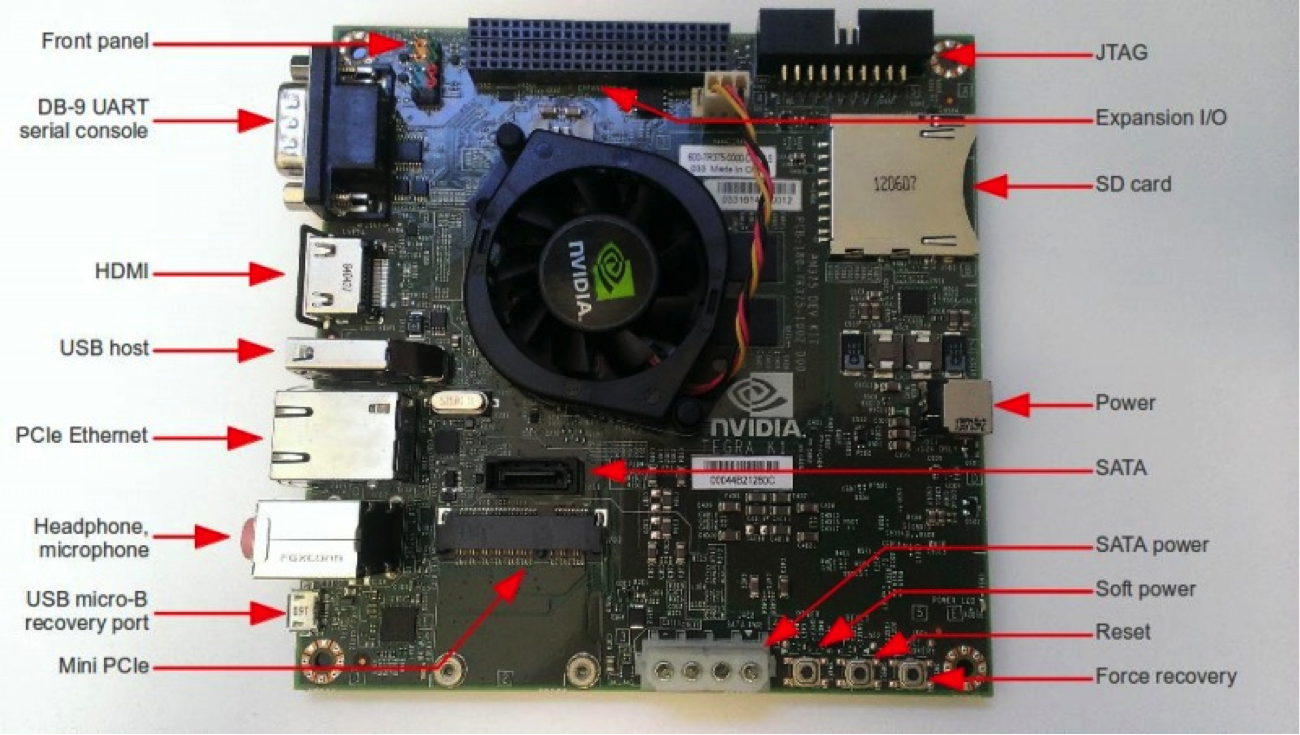

Luckily, companies like NVIDIA are addressing exactly this problem. Our team uses the Jetson TK1, which was released in mid-2014. The Jetson TK1 is a powerful development board built around a Tegra K1 SoC (essentially a processor with a CPU and a GPU on one chip), so it can run both CPU- and GPU-optimized code. GPUs used for general-purpose computing (GPGPU), like the one on the Jetson TK1, are well suited for computer vision tasks because the convolutions across matrices (more on this later) can be split across the GPU's 192 CUDA cores.
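To see why this kind of work splits so well across GPU cores, here is a minimal pure-Python sketch of a filter applied as a convolution. It is not our robot code: the names `convolve_pixel` and `convolve` are hypothetical, and it runs sequentially on the CPU. The point is only that each output pixel is computed from the input image alone, so the pixels could be handed out to separate cores in any order.

```python
def convolve_pixel(image, kernel, row, col):
    """Weighted sum of the neighbourhood around (row, col).

    Only reads from `image`, never from other output pixels, so every
    call is independent of every other call.
    Note: the kernel is not flipped, which matches a true convolution
    only for symmetric kernels such as the box blur used below.
    """
    k = len(kernel) // 2
    total = 0
    for dr in range(-k, k + 1):
        for dc in range(-k, k + 1):
            total += image[row + dr][col + dc] * kernel[dr + k][dc + k]
    return total


def convolve(image, kernel):
    """Apply `kernel` to every interior pixel; border pixels are left at 0."""
    k = len(kernel) // 2
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    # On a GPU, each (r, c) pair below would be handled by its own thread.
    for r in range(k, rows - k):
        for c in range(k, cols - k):
            out[r][c] = convolve_pixel(image, kernel, r, c)
    return out


if __name__ == "__main__":
    # Unnormalized 3x3 box filter on a tiny 5x5 test image.
    box = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
    image = [[r * 5 + c for c in range(5)] for r in range(5)]
    for line in convolve(image, box):
        print(line)
```

Because the loop body never depends on a previous iteration, the two `for` loops in `convolve` are exactly the part a GPU can run in parallel.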

In other words, the Jetson TK1 runs computer vision code much faster than a CPU-only board of similar size (such as the Raspberry Pi), so we can actually use it on the robot!

Figure 2: Jetson TK1 with labeled components.
